A Short Note on Stratified Sampling

Table of Contents

Stratified sampling is a Monte Carlo (MC) method to estimate an integral that consist a distribution and a function of interest

via partitioning into smaller groups, called strata.

It is a type of quasi-Monte Carlo (QMC) method as it introduces deterministic procedures into the MC estimation.

Same as crude Monte Carlo the estimation is still unbiased;

however, the variance of the estimator can be smaller than crude MC.

(Crude) Monte Carlo

The (crude) Monte Carlo estimator of is

where and

is the number of MC samples.

This estimator is an unbiased1 estimator of the original target :

Now let’s look at the variance of the estimator .

We first need to define

, which is the variance of

under the distribution

. Now the variance of interest is

As it can be seen, the variance drops with the order of by using more samples.

Example

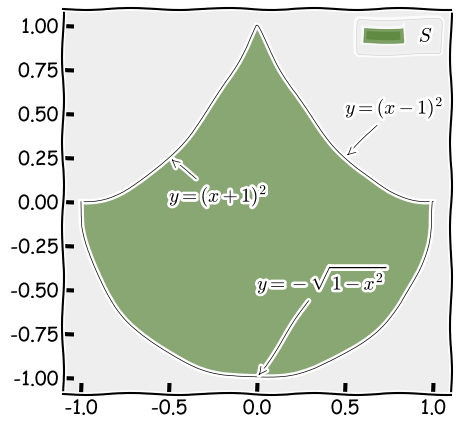

I now use an area estimation example2 to demonstrate how the empirical estimate of the variance of the crude Monte Carlo estimator changes as the number of samples increases.

The area I am interested in estimating is illustrated in the figure below.

Figure 1: The area

This is equivalent to computing the integral below

where is the indicator function and

is the uniform distribution over

.

The crude MC estimator for is then

where and

is the MC samples.

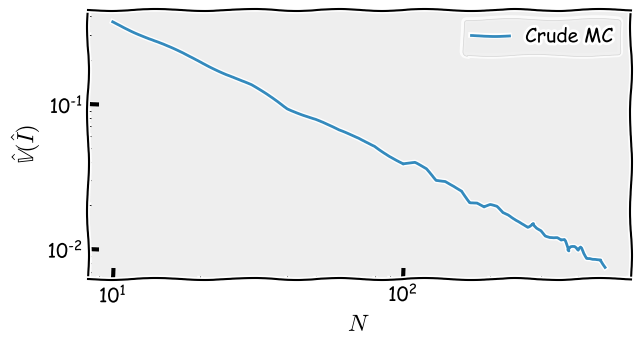

I now visualise how the variance of changes as

increases.

Figure 2: Variance convergence of crude MC

Note that both the x- and y-axis are in logarithm scale.

As we can see, it matches our analysis results, which is .3

Stratified sampling

Now, we look at how stratified sampling works and

how the variance of the corresponding estimator changes depending on different stratifications.

For the sake of simplicity, we consider the case where a

-dimensional uniform distribution for the integral in equation 1.4

We first partition

into

strata s.t.

.

Then, as the simplest way of performing stratified sampling,

we uniformly draw independent Monte Carlo samples for each stratum

,

and the overall estimator is given as below

where are samples that lie in

and

is the number of MC samples in stratum

.

The mean of within each stratum

is

and the corresponding variance is

As it is mentioned in the beginning, is still an unbiased estimator of

.

We can validate this by computing the mean of

The variance of is then

Effect of different allocation strategies

We are interested in comparing this estimator against (crude) Monte Carlo.

In particular,

we’d like to show that when is allocated proportionally to

the estimator can only decrease in variance as compared to (crude) Monte Carlo.

In order to show this,

we need to rewrite the variance of crude Monte Carlo in terms of strata.

Let’s introduce - the stratum that contains

:

.

By the law of total variance, we have

Thus

Note that in the case of proportional allocation,

because ,

which is smaller than .

Although it is not the optimal allocation5,

proportional allocation as applied to stratified sampling will never increase the variance over that of crude Monte Carlo.

Also, note that a poor allocation can also increase the variance of the estimator in comparison to crude Monte Carlo.

Effect of different stratification methods

It is interesting to note that, if is in 2D and is dominated by the horizontal direction more than the vertical direction,

partitioning in the horizontal direction would be more effective than partitioning in the vertical direction.

We will see it in the example soon.

Example (continued)

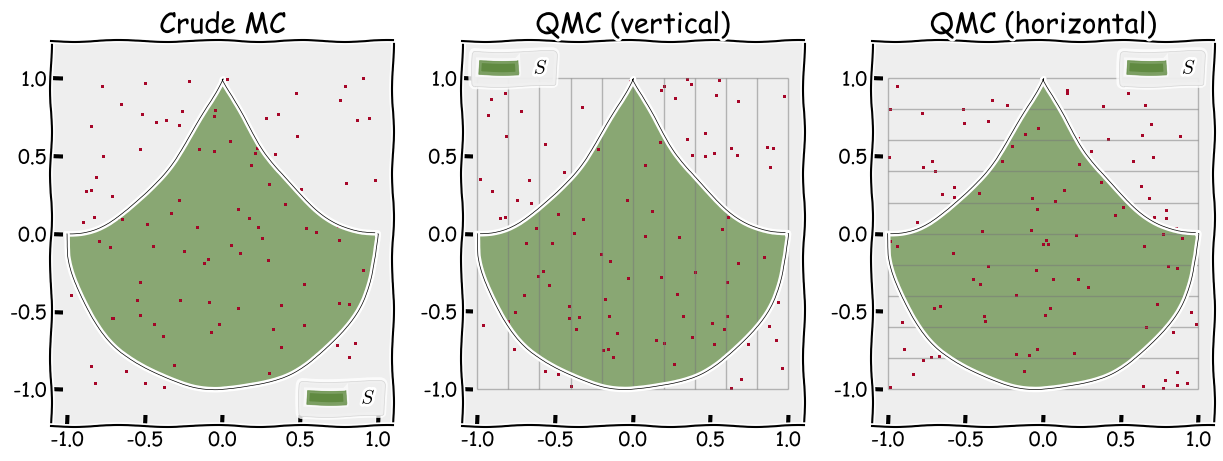

We continue with the example above by looking at 2 ways of stratifying the previously defined area.

- Vertically partitioning the space into 10 parts

- Horizontally partitioning the space into 10 parts

We illustrate where the samples are located for each method and for crude Monte Carlo in the figure below.

Figure 3: Different ways of sampling: crude MC (left), stratified sampling with vertical partitioning (middle) and stratified sampling with horizontal paritioning (right)

Notice that there are 100 samples in total for each method and that, for the stratified sampling methods, there are exactly 10 samples in each box.

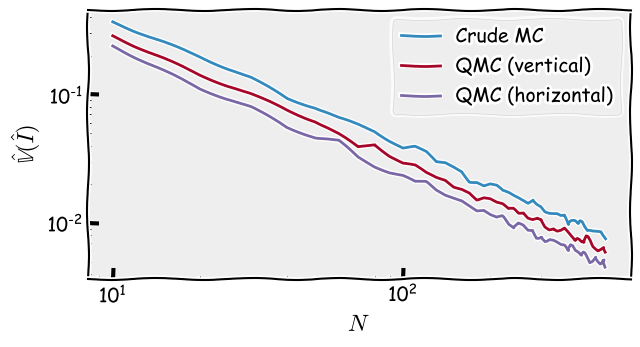

Now what is interesting is the rate of variance of each estimator as the number of samples increase (shown below).

Figure 4: Variance convergence: crude MC v.s. quasi MC with different paritioning

As it can be seen, both QMC methods converge faster than crude Monte Carlo, as expected by our analysis. What’s more interesting is that horizontally partitioning the space is more effective than vertically partitioning it. This is because the pre-defined shape is dominated by the y-axis and not the x-axis. This domination can be explained by the shape’s symmetry along the y-axis and not the x-axis which means that the y-axis contains more information about the shape.